zabbix-kubernetes-discovery

Zabbix Kubernetes Discovery

Introduction

Kubernetes monitoring for Zabbix, with discovery of the following objects:

- Nodes

- DaemonSets

- Deployments

- StatefulSets

- CronJobs

- PersistentVolumeClaims

- SystemPods

By default, only two variables are required:

- ZABBIX_ENDPOINT: Zabbix server or proxy that the data is sent to

- KUBERNETES_NAME: Name of your Kubernetes cluster in Zabbix (host)
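To make the role of the two variables concrete, here is a minimal sketch of how a value could be packaged for a Zabbix server or proxy with the Zabbix sender (trapper) protocol. The transport is an assumption, not a description of this project's code, and the function name `build_sender_packet` and the item key `example.key` are hypothetical. KUBERNETES_NAME fills the `host` field, and ZABBIX_ENDPOINT is where the resulting bytes would be sent (the Zabbix trapper port is 10051 by default).

```python
import json
import struct


def build_sender_packet(host, values):
    """Encode {item_key: value} pairs for `host` as a Zabbix sender request.

    `host` plays the role of KUBERNETES_NAME. Item keys are hypothetical here;
    the real keys are defined by the imported Zabbix template.
    """
    body = json.dumps({
        "request": "sender data",
        "data": [
            {"host": host, "key": key, "value": str(value)}
            for key, value in values.items()
        ],
    }).encode("utf-8")
    # Header: "ZBXD" signature, flags byte 0x01, then the body length and a
    # reserved field, both little-endian 32-bit unsigned integers.
    header = b"ZBXD\x01" + struct.pack("<II", len(body), 0)
    return header + body


packet = build_sender_packet("kubernetes-cluster-example", {"example.key": 1})
```

The sketch only builds the bytes and does not open a network connection, so it can be run without a Zabbix server.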

Helm

Before installation, create the zabbix-monitoring namespace in your cluster:

$ kubectl create namespace zabbix-monitoring

All Helm options/parameters are available in the ./helm/ folder of this repository.

Install from local

To install the chart with the release name zabbix-kubernetes-discovery from local Helm templates:

$ helm upgrade --install zabbix-kubernetes-discovery \
    ./helm/zabbix-kubernetes-discovery/ \
    --values ./helm/zabbix-kubernetes-discovery/values.yaml \
    --namespace zabbix-monitoring \
    --set namespace.name="zabbix-monitoring" \
    --set environment.ZABBIX_ENDPOINT="zabbix-proxy.example.com" \
    --set environment.KUBERNETES_NAME="kubernetes-cluster-example"

Install from repo

To install the chart with the release name zabbix-kubernetes-discovery from my Helm repository:

$ helm repo add djerfy https://djerfy.github.io/helm-charts

$ helm upgrade --install zabbix-kubernetes-discovery \
    djerfy/zabbix-kubernetes-discovery \
    --namespace zabbix-monitoring \
    --set namespace.name="zabbix-monitoring" \
    --set environment.ZABBIX_ENDPOINT="zabbix-proxy.example.com" \
    --set environment.KUBERNETES_NAME="kubernetes-cluster-name"

Uninstall

To uninstall/delete the zabbix-kubernetes-discovery deployment:

$ helm list -n zabbix-monitoring

$ helm delete -n zabbix-monitoring zabbix-kubernetes-discovery

The command removes all the Kubernetes components associated with the chart and deletes the release.

Commands

usage: zabbix-kubernetes-discovery.py [-h]
                                      [--zabbix-timeout ZABBIX_TIMEOUT]
                                      --zabbix-endpoint ZABBIX_ENDPOINT
                                      --kubernetes-name KUBERNETES_NAME
                                      --monitoring-mode {volume,deployment,daemonset,node,statefulset,cronjob,systempod}
                                      --monitoring-type {discovery,item,json}
                                      [--object-name OBJECT_NAME]
                                      [--match-label KEY=VALUE]
                                      [--include-name INCLUDE_NAME]
                                      [--include-namespace INCLUDE_NAMESPACE]
                                      [--exclude-name EXCLUDE_NAME]
                                      [--exclude-namespace EXCLUDE_NAMESPACE]
                                      [--no-wait]
                                      [--verbose]
                                      [--debug]
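The usage text above reads like `argparse` output. As an illustration only, the following sketch rebuilds an equivalent parser from that text. It is an assumption and not the project's actual source: argument types, defaults, and help strings are not shown in the usage, so none are set here, and `build_parser` is a hypothetical name.

```python
import argparse

MONITORING_MODES = [
    "volume", "deployment", "daemonset", "node",
    "statefulset", "cronjob", "systempod",
]
MONITORING_TYPES = ["discovery", "item", "json"]


def build_parser():
    """Return a parser accepting the flags listed in the usage text."""
    parser = argparse.ArgumentParser(prog="zabbix-kubernetes-discovery.py")
    # Optional in the usage text (shown in square brackets).
    parser.add_argument("--zabbix-timeout")
    # Required in the usage text (shown without square brackets).
    parser.add_argument("--zabbix-endpoint", required=True)
    parser.add_argument("--kubernetes-name", required=True)
    parser.add_argument("--monitoring-mode", required=True, choices=MONITORING_MODES)
    parser.add_argument("--monitoring-type", required=True, choices=MONITORING_TYPES)
    # Optional filters.
    parser.add_argument("--object-name")
    parser.add_argument("--match-label", metavar="KEY=VALUE")
    parser.add_argument("--include-name")
    parser.add_argument("--include-namespace")
    parser.add_argument("--exclude-name")
    parser.add_argument("--exclude-namespace")
    # Flags that take no value.
    parser.add_argument("--no-wait", action="store_true")
    parser.add_argument("--verbose", action="store_true")
    parser.add_argument("--debug", action="store_true")
    return parser


args = build_parser().parse_args([
    "--zabbix-endpoint", "zabbix-proxy.example.com",
    "--kubernetes-name", "kubernetes-cluster-example",
    "--monitoring-mode", "node",
    "--monitoring-type", "discovery",
])
```

The four arguments passed in the example are the minimum the usage text marks as required; every other option can be left out.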

Zabbix

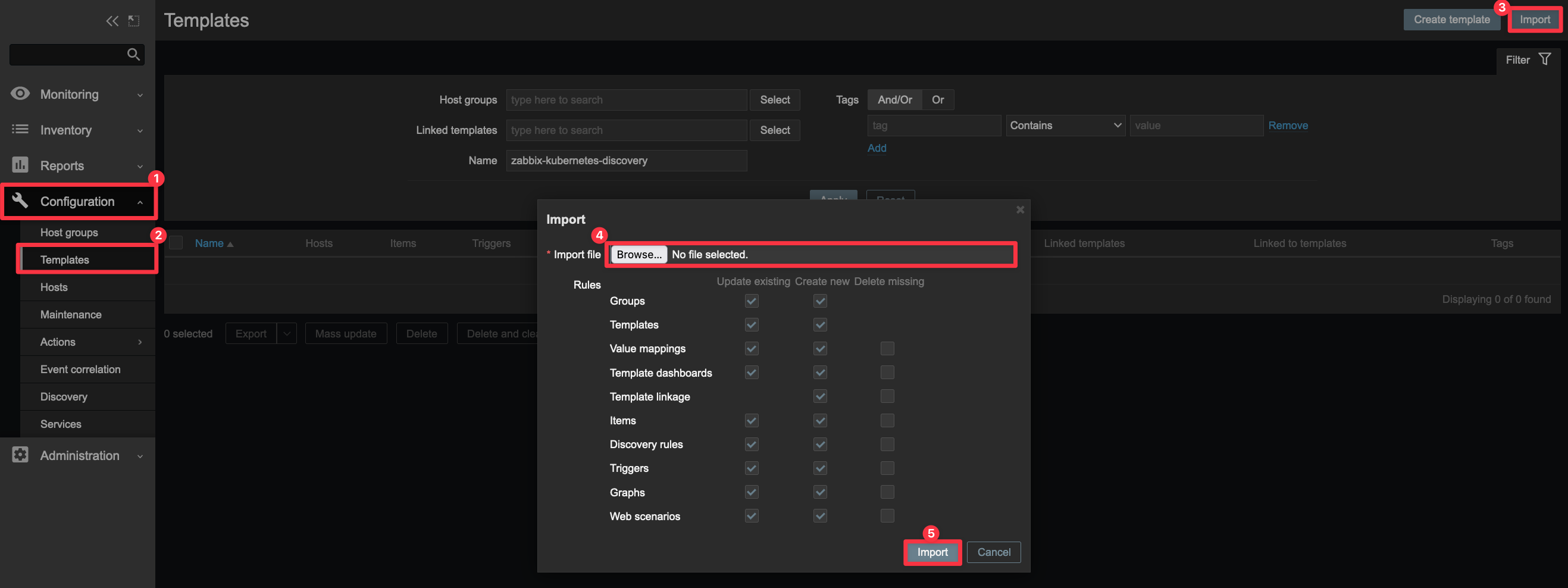

Import template

The Zabbix template is located in the ./zabbix/ folder of this repository.

After downloading it, import it as follows:

- Go to Configuration in the menu

- Open Templates

- Click Import

- Select the downloaded template file

- Confirm the import

Discovery rules

- Daemonset

  - Items: 4
    - Daemonset {#KUBERNETES_DAEMONSET_NAMESPACE}/{#KUBERNETES_DAEMONSET_NAME}: Available replicas
    - Daemonset {#KUBERNETES_DAEMONSET_NAMESPACE}/{#KUBERNETES_DAEMONSET_NAME}: Current replicas
    - Daemonset {#KUBERNETES_DAEMONSET_NAMESPACE}/{#KUBERNETES_DAEMONSET_NAME}: Desired replicas
    - Daemonset {#KUBERNETES_DAEMONSET_NAMESPACE}/{#KUBERNETES_DAEMONSET_NAME}: Ready replicas
  - Triggers: 5
    - Daemonset {#KUBERNETES_DAEMONSET_NAMESPACE}/{#KUBERNETES_DAEMONSET_NAME}: Available replicas nodata
    - Daemonset {#KUBERNETES_DAEMONSET_NAMESPACE}/{#KUBERNETES_DAEMONSET_NAME}: Current replicas nodata
    - Daemonset {#KUBERNETES_DAEMONSET_NAMESPACE}/{#KUBERNETES_DAEMONSET_NAME}: Desired replicas nodata
    - Daemonset {#KUBERNETES_DAEMONSET_NAMESPACE}/{#KUBERNETES_DAEMONSET_NAME}: Ready replicas nodata
    - Daemonset {#KUBERNETES_DAEMONSET_NAMESPACE}/{#KUBERNETES_DAEMONSET_NAME}: Problem items nodata
  - Graphs: 1
    - Daemonset {#KUBERNETES_DAEMONSET_NAMESPACE}/{#KUBERNETES_DAEMONSET_NAME}: Graph replicas

- Deployment

  - Items: 3
    - Deployment {#KUBERNETES_DEPLOYMENT_NAMESPACE}/{#KUBERNETES_DEPLOYMENT_NAME}: Available replicas
    - Deployment {#KUBERNETES_DEPLOYMENT_NAMESPACE}/{#KUBERNETES_DEPLOYMENT_NAME}: Desired replicas
    - Deployment {#KUBERNETES_DEPLOYMENT_NAMESPACE}/{#KUBERNETES_DEPLOYMENT_NAME}: Ready replicas
  - Triggers: 5
    - Deployment {#KUBERNETES_DEPLOYMENT_NAMESPACE}/{#KUBERNETES_DEPLOYMENT_NAME}: Available replicas nodata
    - Deployment {#KUBERNETES_DEPLOYMENT_NAMESPACE}/{#KUBERNETES_DEPLOYMENT_NAME}: Desired replicas nodata
    - Deployment {#KUBERNETES_DEPLOYMENT_NAMESPACE}/{#KUBERNETES_DEPLOYMENT_NAME}: Ready replicas nodata
    - Deployment {#KUBERNETES_DEPLOYMENT_NAMESPACE}/{#KUBERNETES_DEPLOYMENT_NAME}: Problem items nodata
    - Deployment {#KUBERNETES_DEPLOYMENT_NAMESPACE}/{#KUBERNETES_DEPLOYMENT_NAME}: Problem number of replicas
  - Graphs: 1
    - Deployment {#KUBERNETES_DEPLOYMENT_NAMESPACE}/{#KUBERNETES_DEPLOYMENT_NAME}: Graph replicas

- Statefulset

  - Items: 3
    - Statefulset {#KUBERNETES_STATEFULSET_NAMESPACE}/{#KUBERNETES_STATEFULSET_NAME}: Available replicas
    - Statefulset {#KUBERNETES_STATEFULSET_NAMESPACE}/{#KUBERNETES_STATEFULSET_NAME}: Desired replicas
    - Statefulset {#KUBERNETES_STATEFULSET_NAMESPACE}/{#KUBERNETES_STATEFULSET_NAME}: Ready replicas
  - Triggers: 5
    - Statefulset {#KUBERNETES_STATEFULSET_NAMESPACE}/{#KUBERNETES_STATEFULSET_NAME}: Available replicas nodata
    - Statefulset {#KUBERNETES_STATEFULSET_NAMESPACE}/{#KUBERNETES_STATEFULSET_NAME}: Desired replicas nodata
    - Statefulset {#KUBERNETES_STATEFULSET_NAMESPACE}/{#KUBERNETES_STATEFULSET_NAME}: Ready replicas nodata
    - Statefulset {#KUBERNETES_STATEFULSET_NAMESPACE}/{#KUBERNETES_STATEFULSET_NAME}: Problem items nodata
    - Statefulset {#KUBERNETES_STATEFULSET_NAMESPACE}/{#KUBERNETES_STATEFULSET_NAME}: Problem number of replicas
  - Graphs: 1
    - Statefulset {#KUBERNETES_STATEFULSET_NAMESPACE}/{#KUBERNETES_STATEFULSET_NAME}: Graph replicas

- Cronjob

  - Items: 3
    - Cronjob {#KUBERNETES_CRONJOB_NAMESPACE}/{#KUBERNETES_CRONJOB_NAME}: Job exitcode
    - Cronjob {#KUBERNETES_CRONJOB_NAMESPACE}/{#KUBERNETES_CRONJOB_NAME}: Job restart
    - Cronjob {#KUBERNETES_CRONJOB_NAMESPACE}/{#KUBERNETES_CRONJOB_NAME}: Job reason
  - Triggers: 5
    - Cronjob {#KUBERNETES_CRONJOB_NAMESPACE}/{#KUBERNETES_CRONJOB_NAME}: Job exitcode nodata
    - Cronjob {#KUBERNETES_CRONJOB_NAMESPACE}/{#KUBERNETES_CRONJOB_NAME}: Job restart nodata
    - Cronjob {#KUBERNETES_CRONJOB_NAMESPACE}/{#KUBERNETES_CRONJOB_NAME}: Job reason nodata
    - Cronjob {#KUBERNETES_CRONJOB_NAMESPACE}/{#KUBERNETES_CRONJOB_NAME}: Problem items nodata
    - Cronjob {#KUBERNETES_CRONJOB_NAMESPACE}/{#KUBERNETES_CRONJOB_NAME}: Problem last job
  - Graphs: 1
    - Cronjob {#KUBERNETES_CRONJOB_NAMESPACE}/{#KUBERNETES_CRONJOB_NAME}: Graph jobs

- Node

  - Items: 8
    - Node {#KUBERNETES_NODE_NAME}: Allocatable cpu
    - Node {#KUBERNETES_NODE_NAME}: Allocatable memory
    - Node {#KUBERNETES_NODE_NAME}: Allocatable pods
    - Node {#KUBERNETES_NODE_NAME}: Capacity cpu
    - Node {#KUBERNETES_NODE_NAME}: Capacity memory
    - Node {#KUBERNETES_NODE_NAME}: Capacity pods
    - Node {#KUBERNETES_NODE_NAME}: Current pods
    - Node {#KUBERNETES_NODE_NAME}: Healthz
  - Triggers: 8
    - Node {#KUBERNETES_NODE_NAME}: Allocatable pods nodata
    - Node {#KUBERNETES_NODE_NAME}: Capacity pods nodata
    - Node {#KUBERNETES_NODE_NAME}: Current pods nodata
    - Node {#KUBERNETES_NODE_NAME}: Problem pods limits warning
    - Node {#KUBERNETES_NODE_NAME}: Problem pods limits critical
    - Node {#KUBERNETES_NODE_NAME}: Health nodata
    - Node {#KUBERNETES_NODE_NAME}: Health problem
    - Node {#KUBERNETES_NODE_NAME}: Problem items nodata
  - Graphs: 1
    - Node {#KUBERNETES_NODE_NAME}: Graph pods

- VolumeClaim

  - Items: 6
    - Volume {#KUBERNETES_PVC_NAMESPACE}/{#KUBERNETES_PVC_NAME}: Available bytes
    - Volume {#KUBERNETES_PVC_NAMESPACE}/{#KUBERNETES_PVC_NAME}: Capacity bytes
    - Volume {#KUBERNETES_PVC_NAMESPACE}/{#KUBERNETES_PVC_NAME}: Capacity inodes
    - Volume {#KUBERNETES_PVC_NAMESPACE}/{#KUBERNETES_PVC_NAME}: Free inodes
    - Volume {#KUBERNETES_PVC_NAMESPACE}/{#KUBERNETES_PVC_NAME}: Used bytes
    - Volume {#KUBERNETES_PVC_NAMESPACE}/{#KUBERNETES_PVC_NAME}: Used inodes
  - Triggers: 11
    - Volume {#KUBERNETES_PVC_NAMESPACE}/{#KUBERNETES_PVC_NAME}: Available bytes nodata
    - Volume {#KUBERNETES_PVC_NAMESPACE}/{#KUBERNETES_PVC_NAME}: Capacity bytes nodata
    - Volume {#KUBERNETES_PVC_NAMESPACE}/{#KUBERNETES_PVC_NAME}: Capacity inodes nodata
    - Volume {#KUBERNETES_PVC_NAMESPACE}/{#KUBERNETES_PVC_NAME}: Consumption bytes critical
    - Volume {#KUBERNETES_PVC_NAMESPACE}/{#KUBERNETES_PVC_NAME}: Consumption bytes warning
    - Volume {#KUBERNETES_PVC_NAMESPACE}/{#KUBERNETES_PVC_NAME}: Consumption inodes critical
    - Volume {#KUBERNETES_PVC_NAMESPACE}/{#KUBERNETES_PVC_NAME}: Consumption inodes warning
    - Volume {#KUBERNETES_PVC_NAMESPACE}/{#KUBERNETES_PVC_NAME}: Free inodes nodata
    - Volume {#KUBERNETES_PVC_NAMESPACE}/{#KUBERNETES_PVC_NAME}: Used bytes nodata
    - Volume {#KUBERNETES_PVC_NAMESPACE}/{#KUBERNETES_PVC_NAME}: Used inodes nodata
    - Volume {#KUBERNETES_PVC_NAMESPACE}/{#KUBERNETES_PVC_NAME}: Problem items nodata
  - Graphs: 2
    - Volume {#KUBERNETES_PVC_NAMESPACE}/{#KUBERNETES_PVC_NAME}: Graph bytes
    - Volume {#KUBERNETES_PVC_NAMESPACE}/{#KUBERNETES_PVC_NAME}: Graph inodes

- Systempod

  - Items: 2
    - Systempod {#KUBERNETES_SYSTEMPOD_NAMESPACE}/{#KUBERNETES_SYSTEMPOD_NAME}: Desired pod
    - Systempod {#KUBERNETES_SYSTEMPOD_NAMESPACE}/{#KUBERNETES_SYSTEMPOD_NAME}: Running pod
  - Triggers: 2
    - Systempod {#KUBERNETES_SYSTEMPOD_NAMESPACE}/{#KUBERNETES_SYSTEMPOD_NAME}: Problem items nodata
    - Systempod {#KUBERNETES_SYSTEMPOD_NAMESPACE}/{#KUBERNETES_SYSTEMPOD_NAME}: Problem pod
  - Graphs: 1
    - Systempod {#KUBERNETES_SYSTEMPOD_NAMESPACE}/{#KUBERNETES_SYSTEMPOD_NAME}: Graph status
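The `{#KUBERNETES_..._NAMESPACE}` and `{#KUBERNETES_..._NAME}` placeholders in the names above are Zabbix low-level discovery (LLD) macros: a discovery rule receives a JSON list of objects, and each object maps macro names to values. The sketch below shows what such a payload could look like for the macros used here. It is an illustration under assumptions, not the project's actual output: `build_discovery` is a hypothetical helper, and the `{"data": [...]}` wrapper is one of the forms Zabbix accepts.

```python
import json


def build_discovery(kind, objects):
    """Build an LLD payload for `kind` (e.g. "DAEMONSET", "NODE", "PVC").

    `objects` is an iterable of (namespace, name) pairs. Pass None as the
    namespace for cluster-scoped objects such as nodes, which only use the
    {#KUBERNETES_NODE_NAME} macro in the lists above.
    """
    prefix = "{#KUBERNETES_" + kind.upper() + "_"
    rows = []
    for namespace, name in objects:
        row = {prefix + "NAME}": name}
        if namespace is not None:
            row[prefix + "NAMESPACE}"] = namespace
        rows.append(row)
    return json.dumps({"data": rows})


daemonsets = build_discovery("daemonset", [("kube-system", "kube-proxy")])
nodes = build_discovery("node", [(None, "worker-1")])
```

Zabbix then creates one set of items, triggers, and graphs per discovered object by substituting the macro values into the prototype names listed above.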

Development

Manual build

You can build the Docker image manually:

$ docker build -t zabbix-kubernetes-discovery .

Contributing

All contributions are welcome! Please fork the repository, create a new branch from main, and then open a pull request.